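Preface

- If convolutional neural networks are the essential architecture for computer vision (CV), then recurrent neural networks are the essential architecture for natural language processing (NLP). Recurrent networks are well suited to variable-length sequence problems, which is why they are so widely used in NLP.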

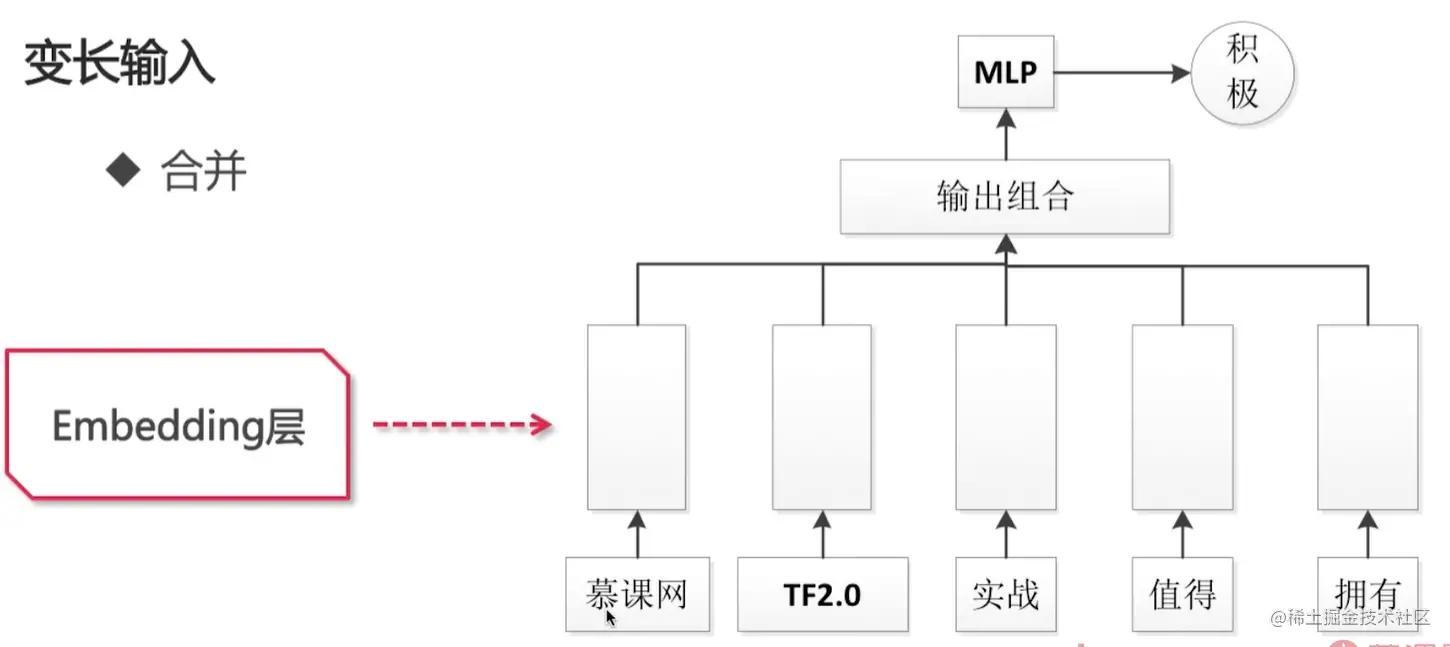

1.1 Embedding and handling variable-length input

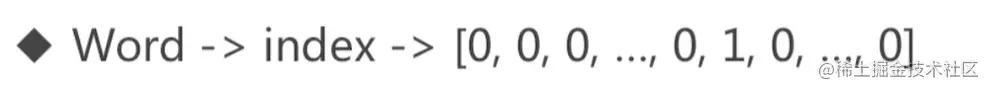

- One-hot encoding

- More widely used: dense embedding, usually just called Embedding

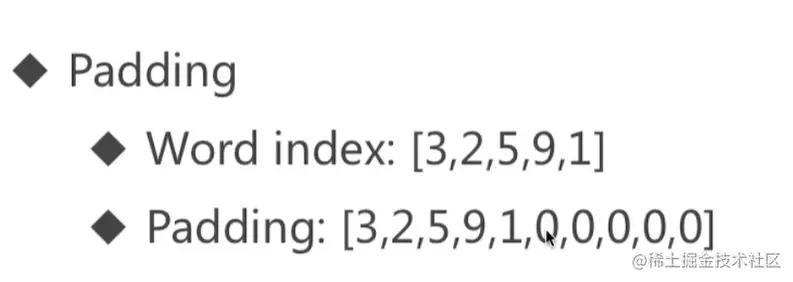
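To make the contrast concrete, here is a minimal sketch in plain Python of one-hot encoding next to a dense embedding lookup. The toy sizes, the random matrix values, and the helper names `one_hot`, `embed`, and `embed_via_one_hot` are assumptions for illustration only; a real Embedding layer learns its matrix during training.

```python
import random

vocab_size = 5       # toy vocabulary size (assumption for illustration)
embedding_dim = 3    # toy embedding width (assumption for illustration)


def one_hot(word_id, size):
    """Return a sparse vector with a single 1.0 at position word_id."""
    return [1.0 if i == word_id else 0.0 for i in range(size)]


# A dense embedding is a [vocab_size, embedding_dim] matrix.
# Random values stand in for the weights a model would learn.
rng = random.Random(0)
embedding_matrix = [[rng.uniform(-1, 1) for _ in range(embedding_dim)]
                    for _ in range(vocab_size)]


def embed(word_id):
    """Look up the dense vector for word_id (one row of the matrix)."""
    return embedding_matrix[word_id]


def embed_via_one_hot(word_id):
    """Compute the same vector as one_hot(word_id) times the matrix."""
    v = one_hot(word_id, vocab_size)
    return [sum(v[i] * embedding_matrix[i][j] for i in range(vocab_size))
            for j in range(embedding_dim)]


print(one_hot(2, vocab_size))   # [0.0, 0.0, 1.0, 0.0, 0.0]
print(len(embed(2)))            # 3
```

The lookup and the one-hot product give the same vector, which is why an embedding can be read as a compact replacement for one-hot input followed by a linear layer.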

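- Variable-length input (padded with 0)

- When a sentence is shorter than the expected length, padding fills it up to that length

- Truncation: a sentence longer than the expected length is cut down to it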

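Padding and truncation can be sketched in a few lines of plain Python. The helper name `pad_or_truncate` and the toy batch are hypothetical, and this version pads and truncates at the end of the sequence for simplicity. Library helpers may pick a different side: Keras `pad_sequences`, for example, truncates from the front by default (`truncating='pre'`).

```python
def pad_or_truncate(seq, max_length, pad_id=0):
    """Return seq as a list of exactly max_length ids."""
    if len(seq) >= max_length:
        # Truncate: keep only the first max_length ids.
        return seq[:max_length]
    # Pad: append pad_id until the sequence reaches max_length.
    return seq + [pad_id] * (max_length - len(seq))


# Toy batch with one short and one long sequence (made-up ids).
batch = [[5, 8, 2], [7, 1, 9, 4, 6, 3]]
fixed = [pad_or_truncate(s, max_length=4) for s in batch]
print(fixed)  # [[5, 8, 2, 0], [7, 1, 9, 4]]
```

After this step every sequence has the same length, so the batch can be stored as one rectangular array.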

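1.2 Using Embedding for text classification

- Hands-on practice with the imdb dataset from keras

- imdb is a set of movie reviews, each labeled either positive or negative, so the task is binary classification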


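1.2.1 Reading the data

    # Assumed import (the original snippet does not show it)
    from tensorflow import keras

    # Load the imdb dataset
    imdb = keras.datasets.imdb
    vocab_size = 10000
    index_from = 3
    # num_words limits the vocabulary: the 10000 most frequent words are kept,
    # and every other word is replaced by the out-of-vocabulary token
    # index_from: offset added to every word index
    (train_data, train_labels), (test_data, test_labels) = imdb.load_data(
        num_words=vocab_size, index_from=index_from)

1.2.2 Inspecting the data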

- Print the first sample of train_data and train_labels

- Print the shapes of train_data and train_labels

- Print the lengths of the first and second samples of train_data

- Print the shapes of test_data and test_labels

In code:

    print(train_data[0], train_labels[0])
    print(train_data.shape, train_labels.shape)
    print(len(train_data[0]), len(train_data[1]))
    print(test_data.shape, test_labels.shape)

Output:

    [1, 14, 22, 16, 43, 530, 973, 1622, 1385, 65, 458, 4468, 66, 3941, 4, 173, 36, 256, 5, 25, 100, 43, 838, 112, 50, 670, 2, 9, 35, 480, 284, 5, 150, 4, 172, 112, 167, 2, 336, 385, 39, 4, 172, 4536, 1111, 17, 546, 38, 13, 447, 4, 192, 50, 16, 6, 147, 2025, 19, 14, 22, 4, 1920, 4613, 469, 4, 22, 71, 87, 12, 16, 43, 530, 38, 76, 15, 13, 1247, 4, 22, 17, 515, 17, 12, 16, 626, 18, 2, 5, 62, 386, 12, 8, 316, 8, 106, 5, 4, 2223, 5244, 16, 480, 66, 3785, 33, 4, 130, 12, 16, 38, 619, 5, 25, 124, 51, 36, 135, 48, 25, 1415, 33, 6, 22, 12, 215, 28, 77, 52, 5, 14, 407, 16, 82, 2, 8, 4, 107, 117, 5952, 15, 256, 4, 2, 7, 3766, 5, 723, 36, 71, 43, 530, 476, 26, 400, 317, 46, 7, 4, 2, 1029, 13, 104, 88, 4, 381, 15, 297, 98, 32, 2071, 56, 26, 141, 6, 194, 7486, 18, 4, 226, 22, 21, 134, 476, 26, 480, 5, 144, 30, 5535, 18, 51, 36, 28, 224, 92, 25, 104, 4, 226, 65, 16, 38, 1334, 88, 12, 16, 283, 5, 16, 4472, 113, 103, 32, 15, 16, 5345, 19, 178, 32] 1
    (25000,) (25000,)
    218 189
    (25000,) (25000,)

Observations:

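- Each sample in train_data is a list of word indices, and each entry in train_labels is a single value

- train_data and train_labels both have shape (25000,)

- train_data is variable-length: its first and second samples have different lengths (218 and 189)

- test_data and test_labels both have shape (25000,)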
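Since the samples differ in length, it can help to look at the length distribution before choosing a fixed length. The sketch below uses a hypothetical helper `length_summary` on toy sequences. The lengths 218 and 189 come from the output above; 550 and 43 are made up for illustration.

```python
def length_summary(sequences, max_length):
    """Summarize sequence lengths and the share that exceeds max_length."""
    lengths = [len(s) for s in sequences]
    too_long = sum(1 for n in lengths if n > max_length)
    return {
        "min": min(lengths),
        "max": max(lengths),
        "mean": sum(lengths) / len(lengths),
        "truncated_fraction": too_long / len(lengths),
    }


# Toy stand-in for train_data: only the lengths matter here.
toy = [[1] * 218, [1] * 189, [1] * 550, [1] * 43]
summary = length_summary(toy, max_length=500)
print(summary)
```

On the real data, the same function could be called with train_data to see how many reviews a given maximum length would cut.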

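1.2.3 Loading the vocabulary

Use get_word_index to load the vocabulary:

    word_index = imdb.get_word_index()
    print(len(word_index))

Output:

    88584

Because index_from = 3 was used when loading the data, every index in the vocabulary must be shifted by 3 as well:

    word_index = {k: (v + 3) for k, v in word_index.items()}

The original indices start at 1, so this shift frees indices 0 to 3 for special tokens. The shifted vocabulary can then be inverted to decode a sample back into text: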

    # '<PAD>': padding token used to fill short sequences
    # '<START>': start token inserted at the beginning of every sentence
    # '<UNK>': unknown token returned for words not in the vocabulary
    # '<END>': end token appended to the end of every sentence
    word_index['<PAD>'] = 0
    word_index['<START>'] = 1
    word_index['<UNK>'] = 2
    word_index['<END>'] = 3

    reverse_word_index = dict(
        [(value, key) for key, value in word_index.items()])

    def decode_review(text_ids):
        return ' '.join(
            [reverse_word_index.get(word_id, "<UNK>") for word_id in text_ids])

    decode_review(train_data[0])

Output:

    "<START> this film was just brilliant casting location scenery story direction everyone's really suited the part they played and you could just imagine being there robert <UNK> is an amazing actor and now the same being director <UNK> father came from the same scottish island as myself so i loved the fact there was a real connection with this film the witty remarks throughout the film were great it was just brilliant so much that i bought the film as soon as it was released for <UNK> and would recommend it to everyone to watch and the fly fishing was amazing really cried at the end it was so sad and you know what they say if you cry at a film it must have been good and this definitely was also <UNK> to the two little boy's that played the <UNK> of norman and paul they were just brilliant children are often left out of the <UNK> list i think because the stars that play them all grown up are such a big profile for the whole film but these children are amazing and should be praised for what they have done don't you think the whole story was so lovely because it was true and was someone's life after all that was shared with us all"

1.2.4 Padding the data

    # Sentences shorter than 500 are padded; longer ones are truncated
    max_length = 500

    # Pad the data with keras.preprocessing.sequence.pad_sequences
    train_data = keras.preprocessing.sequence.pad_sequences(
        train_data,                  # list of lists
        value=word_index['<PAD>'],   # value used for padding
        padding='post',              # 'post': pad after the sentence; 'pre': pad before it
        maxlen=max_length)

    test_data = keras.preprocessing.sequence.pad_sequences(
        test_data,                   # list of lists
        value=word_index['<PAD>'],
        padding='post',              # 'post' or 'pre'
        maxlen=max_length)

1.2.5 Defining the model
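The model in this section embeds each word id, averages the embedded vectors over the sequence axis, and passes the result through two dense layers. As a shape-level sketch in plain Python (not the Keras implementation), the averaging step and the parameter counts can be worked out by hand. The helper names and the toy values are assumptions; the layer sizes (vocabulary 10000, embedding width 16, dense layers of 64 and 1 units) are the ones used in this section.

```python
def global_average_pooling_1d(embedded):
    """Average a [steps][embedding_dim] sequence over its time axis."""
    steps = len(embedded)
    dim = len(embedded[0])
    return [sum(vec[j] for vec in embedded) / steps for j in range(dim)]


# Toy embedded sequence: 3 time steps, embedding width 2 (made-up values).
embedded = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
pooled = global_average_pooling_1d(embedded)
print(pooled)  # [3.0, 4.0]


def embedding_params(vocab_size, embedding_dim):
    """One embedding_dim-wide row per vocabulary entry."""
    return vocab_size * embedding_dim


def dense_params(inputs, units):
    """A weight per (input, unit) pair plus one bias per unit."""
    return inputs * units + units


total = (embedding_params(10000, 16)
         + dense_params(16, 64)
         + dense_params(64, 1))
print(total)  # 161153
```

The pooling step is what removes the sequence axis, so the dense layers see one fixed-size vector per review regardless of its original length.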

    embedding_dim = 16
    batch_size = 128

    model = keras.models.Sequential([
        # 1. defines a trainable matrix of shape [vocab_size, embedding_dim]
        # 2. maps an id sequence [1, 2, 3, 4, ...] to max_length * embedding_dim
        # 3. output shape: batch_size * max_length * embedding_dim
        keras.layers.Embedding(vocab_size, embedding_dim,
                               input_length=max_length),
        # batch_size * max_length * embedding_dim
        #   -> batch_size * embedding_dim
        keras.layers.GlobalAveragePooling1D(),
        keras.layers.Dense(64, activation='relu'),
        keras.layers.Dense(1, activation='sigmoid'),
    ])

    model.summary()
    model.compile(optimizer='adam', loss='binary_crossentropy',
                  metrics=['accuracy'])

Output:

    Model: "sequential"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    embedding (Embedding)        (None, 500, 16)           160000
    _________________________________________________________________
    global_average_pooling1d (Gl (None, 16)                0
    _________________________________________________________________
    dense (Dense)                (None, 64)                1088
    _________________________________________________________________
    dense_1 (Dense)              (None, 1)                 65
    =================================================================
    Total params: 161,153
    Trainable params: 161,153
    Non-trainable params: 0

1.2.6 Training the model

- validation_split = 0.2: 20% of the training data is held out as the validation set
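A minimal sketch of what this split amounts to, assuming the behavior described in the Keras documentation (the validation samples are taken from the end of the arrays, before any shuffling). The helper name `split_validation` is hypothetical, and the integers stand in for real samples.

```python
def split_validation(samples, validation_split):
    """Hold out the last fraction of samples as the validation part."""
    split_at = int(len(samples) * (1.0 - validation_split))
    return samples[:split_at], samples[split_at:]


# 25000 placeholder samples, matching the size of the imdb training set.
train_part, val_part = split_validation(list(range(25000)), 0.2)
print(len(train_part), len(val_part))  # 20000 5000
```

With 25000 training reviews, this leaves 20000 for fitting and 5000 for validation.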

Train the model for 30 epochs:

    history = model.fit(train_data, train_labels,
                        epochs=30,
                        batch_size=batch_size,
                        validation_split=0.2)

1.2.7 Plotting the learning curves

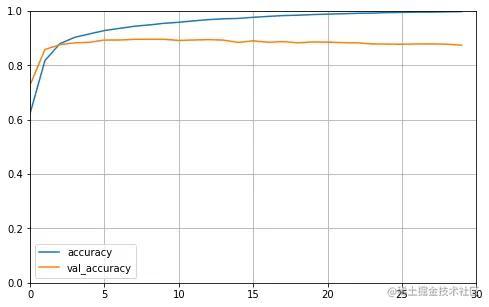

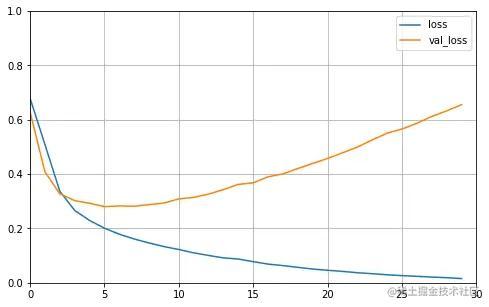

def plot_learning_curves(history, label, epochs, min_value, max_value): data = {} data[label] = history.history[label] data['val_'+label] = history.history['val_'+label] pd.DataFrame(data).plot(figsize=(8, 5)) plt.grid(True) plt.axis([0, epochs, min_value, max_value]) plt.show() plot_learning_curves(history, 'accuracy', 30, 0, 1)plot_learning_curves(history, 'loss', 30, 0, 1)运行结果:

可以发现:

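- Accuracy on the training set keeps rising, while validation accuracy levels off after a certain point and then slowly declines

- Loss on the training set keeps falling, while validation loss falls for a while and then slowly climbs back up, which indicates that the model overfits when trained for too many epochs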
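One simple way to act on this observation is to find the epoch where validation loss was lowest. The sketch below uses a hypothetical helper `best_epoch` and made-up loss values that fall and then rise; with the training run above, the list would instead come from history.history['val_loss'].

```python
def best_epoch(val_losses):
    """Return (1-based epoch, loss) at the lowest validation loss."""
    index = min(range(len(val_losses)), key=lambda i: val_losses[i])
    return index + 1, val_losses[index]


# Made-up validation losses showing the overfitting pattern.
val_loss = [0.68, 0.52, 0.41, 0.35, 0.33, 0.34, 0.37, 0.42]
epoch, loss = best_epoch(val_loss)
print(epoch, loss)  # 5 0.33
```

Keras also provides the keras.callbacks.EarlyStopping callback, which can stop training once a monitored value such as val_loss stops improving; whether that suits this model is a judgment call the original text does not make.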

1.2.8 Evaluating on the test set

    model.evaluate(
        test_data, test_labels,
        batch_size=batch_size,
        verbose=0)

Output (test loss, test accuracy):

    [0.7152004837989807, 0.8564000129699707]
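To show what the two numbers measure, here is a plain-Python sketch of binary cross-entropy and accuracy on a handful of made-up predictions. The function names, the clipping constant `eps`, and the sample values are assumptions for illustration, and the 0.5 threshold is the usual default for binary accuracy. This is not the Keras implementation.

```python
import math


def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean negative log-likelihood of the true labels."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


def binary_accuracy(y_true, y_prob, threshold=0.5):
    """Share of samples whose thresholded prediction matches the label."""
    hits = sum(1 for y, p in zip(y_true, y_prob)
               if (p > threshold) == (y == 1))
    return hits / len(y_true)


labels = [1, 0, 1, 0]          # made-up ground truth
probs = [0.9, 0.2, 0.4, 0.6]   # made-up sigmoid outputs
loss_value = binary_crossentropy(labels, probs)
print(binary_accuracy(labels, probs))  # 0.5
```

Loss penalizes confident wrong predictions heavily, so a model can keep a steady accuracy while its loss rises, which is consistent with the gap between the two test numbers above.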